Felix Krause

AI Engineer Freelancer

External CTO at Klartext AI

Technical Lead and Co-Founder at AIM – AI Impact Mission

agentic AI, multi-agent, tool use, LLM, RAG, coding

As one of the main organizers of AIM - AI Impact Mission, I helped host our third hackathon, which focused on Agentic AI. Participants built multi-agent workflows and AI tools that solve real-world tasks with retrieval, tools and autonomous orchestration. Teams explored agent routing, memory, tool integration and evaluation, creating solutions for research assistance, customer support and structured content generation. It was inspiring to see how quickly agentic patterns can deliver robust, useful systems in just a day.

Wiener Zeitung, generative AI, LLM, RAG, media, coding

As one of the main organizers of AIM - AI Impact Mission, I had the privilege of collaborating with the Media Innovation Lab and Austrian Startup Summit on the hackathon "Put News Archives to Life". We brought together 24 talented participants and challenged them to leverage Large Language Models (LLMs) and Retrieval Augmented Generation (RAG) to unlock the potential of historical newspaper archives provided by Wiener Zeitung, the world's oldest daily newspaper until its daily print edition was discontinued in 2023. Participants had access to over 87,000 digital articles spanning 1998 to 2023 and developed AI-driven solutions to media-related problems. Teams worked on personalized news formats, promoting democratic discourse, fact-checking, semantic classification of biased versus non-biased news, and information mapping for investigative journalism. It was amazing to witness such creativity and dedication, bridging technology and media innovation in meaningful ways within a single day of work.

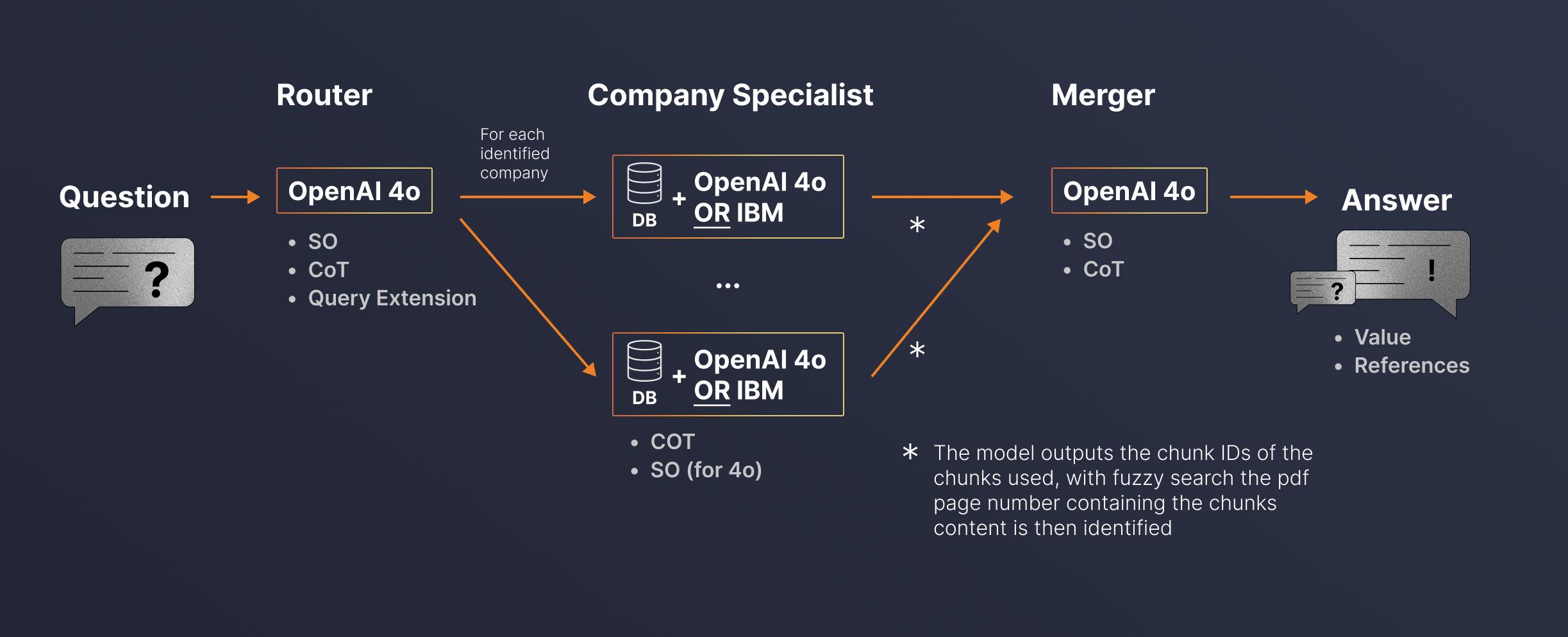

company reports, PDFs, LLM, RAG

At the end of February 2025, I was involved in organizing the Enterprise RAG Challenge by TIMETOACT GROUP Österreich. The goal was to answer complex business questions using cutting-edge Large Language Model (LLM) architectures on annual company reports provided as PDFs. 43 international teams presented impressive and diverse solutions, each offering unique approaches and key learnings. For a detailed exploration of the winning strategy, I highly recommend checking out the winner's insightful blog post linked below. The final interactive leaderboard provides valuable insights into the current state of the art, illustrating which approaches proved effective, and which did not, for PDF-based question-answering tasks. My own submission, which ranked 8th, is a multi-agent pipeline with dedicated company experts, relying on Gemini 2.0 Flash for answer retrieval with the full PDF in context and on ChatGPT 4o-based router and merger agents. An approach using Docling PDF parsing and Qdrant vector database retrieval with custom chunking worked similarly well. Check out my blog post including reflections, challenges, and key takeaways below. It was rewarding to see such creativity and innovation within the community, pushing the boundaries of enterprise LLM applications.

ESG reports, generative AI, LLM, RAG, coding

As one of the main organizers of the AIM Hackathon "Sustainability meets LLMs", I had the privilege of working alongside an incredible team to create an event focused on impactful AI solutions. We challenged participants to design custom Retrieval-Augmented Generation (RAG) architectures that use real-world ESG and sustainability reports to tackle pressing challenges. The teams came up with impressive solutions, including tools for detecting greenwashing in corporate reports and benchmarking company compliance with EU Green Deal goals. It was inspiring to see so much creativity and commitment to sustainability in action.

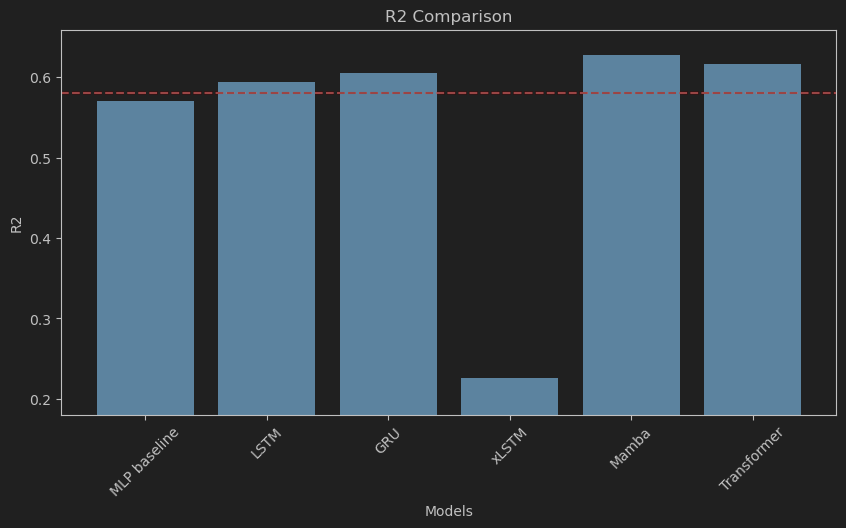

deep learning, mRNA, bioinformatics, master thesis, PyTorch

Designing optimal mRNA sequences to enhance the efficiency and effectiveness of protein synthesis in human cells is pivotal for advancing gene therapies and mRNA vaccines, with the potential to reduce side effects. A key factor in this design process is understanding the efficiency of mRNA translation into protein, for which the Protein-to-mRNA (PTR) ratio serves as a proxy. This master's thesis benchmarks and optimizes state-of-the-art deep learning architectures to classify whether a given mRNA sequence results in low or high protein expression in specific human tissues. Initially, several established and novel architectures are evaluated using a dataset comprising thousands of mRNA sequences and their corresponding PTR ratios across 29 human tissues. The most promising model is then further optimized through hyperparameter tuning, the incorporation of extended features such as secondary structure predictions and the application of advanced training techniques, including unsupervised pretraining. Results show that although advanced sequence-based deep learning models are capable of predicting PTR ratios across tissues, they do not outperform simpler codon frequency-based models. This finding suggests that codon frequencies capture most of the relevant information required for PTR classification, which is aligned with previous research. Notably, a frequency-based Multilayer Perceptron (MLP) significantly outperforms both sophisticated sequence-based architectures and prior shallow frequency-based models, demonstrating the potential of deep learning when codon frequencies are used as input features. Nonetheless, future work is needed to further investigate the deterministic genomic mechanisms underlying translation efficiency and to enhance predictive performance.
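
To illustrate what "codon frequencies as input features" means, here is a minimal sketch of how such a feature vector could be computed. It is not the thesis code: the function name `codon_frequencies`, the fixed codon ordering, and the assumptions that the input is a coding sequence whose reading frame starts at position 0 are all mine.

```python
from collections import Counter
from itertools import product

# All 64 codons in a fixed order, so every sequence maps to the same feature layout.
CODONS = ["".join(triplet) for triplet in product("ACGU", repeat=3)]
CODON_SET = set(CODONS)


def codon_frequencies(cds):
    """Return the relative frequency of each of the 64 codons in a coding sequence.

    Assumption: the reading frame starts at index 0 of `cds`.
    """
    seq = cds.upper().replace("T", "U")  # accept DNA or RNA notation
    usable = len(seq) - len(seq) % 3     # drop an incomplete trailing codon
    codons = [seq[i:i + 3] for i in range(0, usable, 3)]
    # Skip triplets containing ambiguous bases such as N.
    counts = Counter(c for c in codons if c in CODON_SET)
    total = sum(counts.values())
    if total == 0:
        return [0.0] * len(CODONS)
    return [counts[c] / total for c in CODONS]


features = codon_frequencies("AUGGCCAUGUAA")  # codons: AUG, GCC, AUG, UAA
print(features[CODONS.index("AUG")])  # 0.5
```

The resulting 64-dimensional vector ignores codon order entirely, which is what distinguishes a frequency-based model from a sequence-based one.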

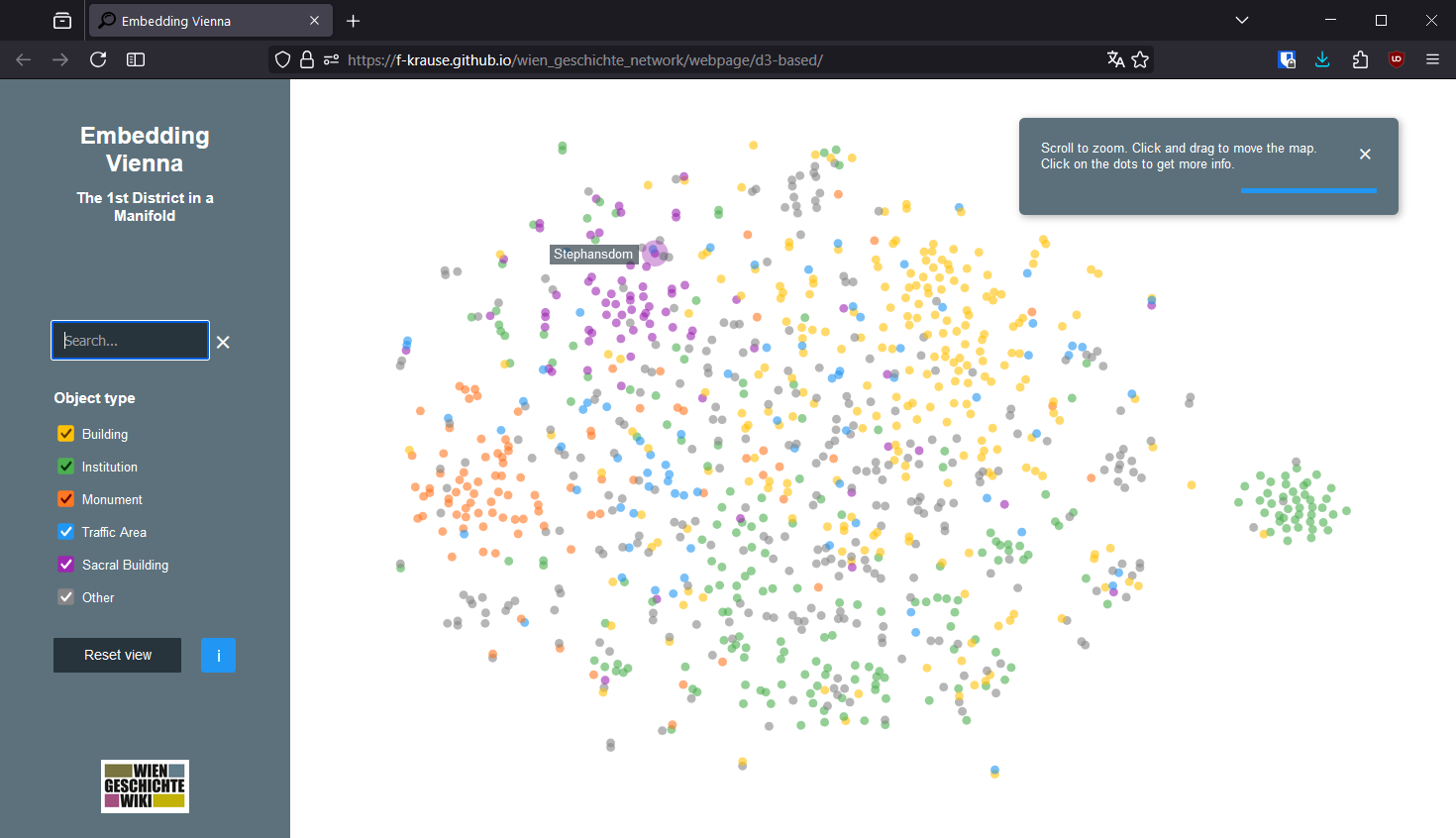

NLP, embeddings, t-SNE, interactive visualization, D3

This project presents an interactive visualization tool for exploring the historical objects of Vienna’s first district. Leveraging sentence transformers to embed Wien Geschichte Wiki articles, the tool organizes hundreds of historical objects into a meaningful 2D space using t-SNE. This enables a broader audience to intuitively and playfully discover and analyze the relationships within Vienna's historical landscape.

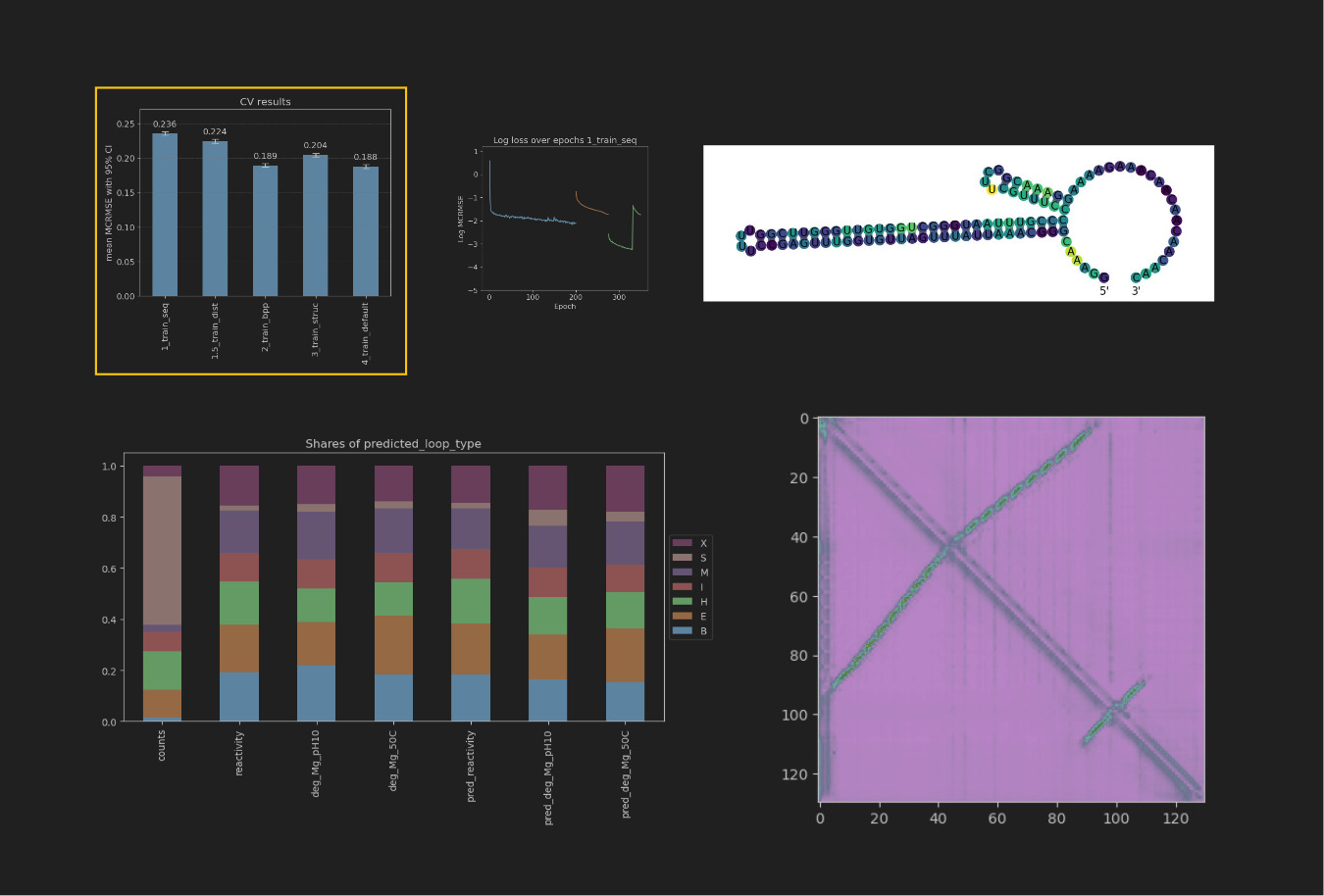

deep learning, transformers, PyTorch, explainable AI, bioinformatics

The goal of this project is to utilize deep learning models to predict the likelihood of a base breaking in an mRNA sequence, as used in COVID-19 vaccines. Understanding and predicting mRNA stability is crucial for enhancing the efficacy and longevity of these vaccines. Additionally, the project aims to provide explanations for the model's predictions regarding base instability. This will be achieved by examining the attention weights of various models in conjunction with exploratory data analysis. These explanations can offer valuable insights into the underlying biological mechanisms and potentially guide the design of more stable mRNA sequences. The main insights suggest that the secondary structure of the mRNA significantly influences its degradation likelihood.

transfer learning, YOLOv5, object detection, distance estimation

The project aimed to develop an algorithm that detects license plates in real-time footage from car cameras in order to estimate distances to other vehicles. Images of cars with standardized license plates, together with their corresponding distances from the camera, were used for training. The PyTorch-based YOLOv5 architecture for object detection was fine-tuned for license plate detection with data augmentation. By applying triangle similarity calculations and angle correction refinements, distances could be accurately estimated. The best model achieved an 85% accuracy rate with a 5% tolerance in estimating distances to cars in front, showing potential for deployment in advanced driver-assistance systems.
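
The triangle similarity step can be sketched as follows. This is a simplified illustration under stated assumptions, not the project code: the function names are hypothetical, the plate width of 0.52 m assumes a standard EU plate, the focal length is assumed to be known in pixels from camera calibration, and the angle correction is left out. The tolerance check assumes the 5% refers to relative error.

```python
def estimate_distance_m(plate_width_px, focal_length_px, plate_width_m=0.52):
    """Estimate the distance to a license plate via triangle similarity.

    For a pinhole camera: real_width / distance = pixel_width / focal_length,
    so distance = real_width * focal_length / pixel_width.
    """
    if plate_width_px <= 0 or focal_length_px <= 0:
        raise ValueError("pixel width and focal length must be positive")
    return plate_width_m * focal_length_px / plate_width_px


def within_tolerance(predicted_m, actual_m, tolerance=0.05):
    """Return True if the prediction is within the relative tolerance (assumed metric)."""
    return abs(predicted_m - actual_m) <= tolerance * actual_m


# A plate detected as 52 px wide by a camera with a 1000 px focal length (made-up values).
distance = estimate_distance_m(plate_width_px=52, focal_length_px=1000)
print(round(distance, 2))  # 10.0
```

Because the pixel width sits in the denominator, small bounding-box errors matter more at larger distances, where the plate covers only a few pixels.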

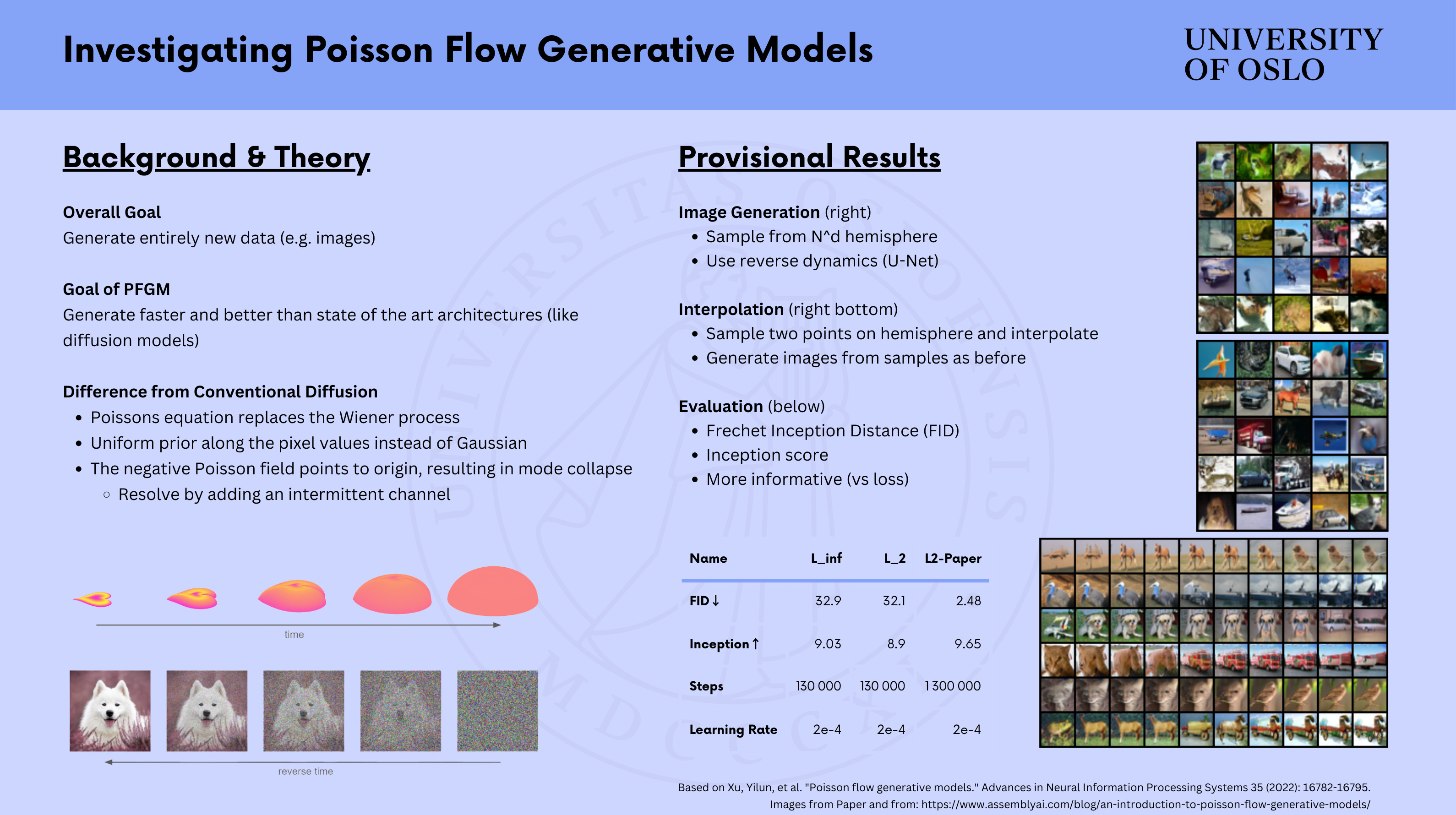

generative AI, deep learning, diffusion

The overall goal was to generate entirely new images using the PFGM (Poisson Flow Generative Model) diffusion approach. PFGM aims to outperform existing diffusion models by using Poisson's equation instead of the Wiener process and by employing a uniform prior, which is thought to be closer to the real data range. It samples from a hemisphere for image generation and utilizes reverse dynamics with a U-Net. Replicating the paper's results was hindered by limited resources, though satisfactory results could be obtained. Finally, attempts were made to enhance the PFGM architecture by using a different prior.

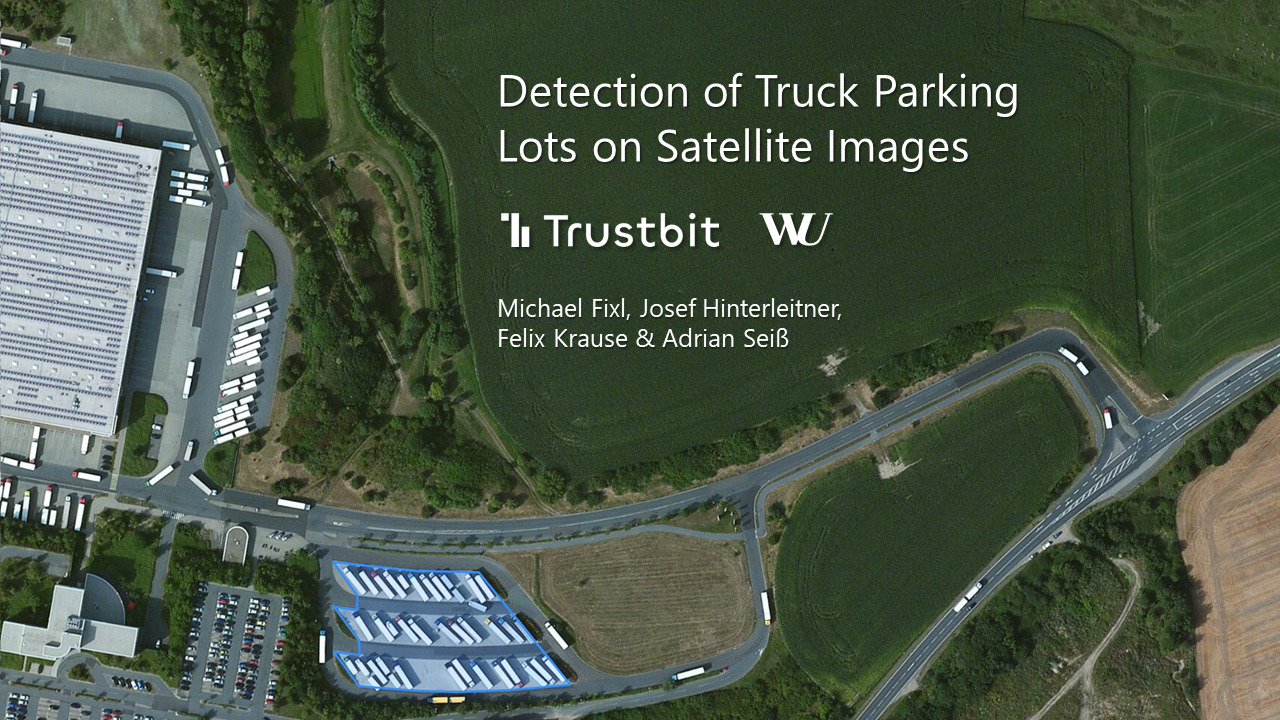

transfer learning, image segmentation, satellite images

This project focuses on the detection of truck parking lots in satellite images for logistics optimization. Deep learning models for image segmentation were trained to predict parking lot shapes. PSPNet initially showed promising results but lacked generalizability. Upon further testing, the LinkNet architecture demonstrated better precision and generalizability, achieving a mean intersection over union (mIoU) of up to 75%. The project exemplifies an approach to leveraging satellite imagery and machine learning for cost-effective estimation of the shapes of parking areas, offering potential benefits for transport operations optimization based on GPS signals.
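
For readers unfamiliar with the metric, here is a minimal sketch of how mIoU can be computed for segmentation masks. It assumes two classes (0 for background, 1 for parking lot) and averaging over classes; the project may have averaged differently, for example per image, and the function names are mine.

```python
def iou(pred, target, cls):
    """Intersection over union for one class, or None if the class is absent from both masks."""
    intersection = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p_hit, t_hit = p == cls, t == cls
            intersection += p_hit and t_hit  # pixel belongs to the class in both masks
            union += p_hit or t_hit          # pixel belongs to the class in at least one mask
    return intersection / union if union else None


def mean_iou(pred, target, classes=(0, 1)):
    """Average the per-class IoU over all classes present in either mask."""
    scores = [s for s in (iou(pred, target, c) for c in classes) if s is not None]
    if not scores:
        raise ValueError("none of the classes occur in the masks")
    return sum(scores) / len(scores)


# Toy 2x2 masks: the prediction marks one pixel too many as parking lot.
predicted = [[1, 1],
             [0, 0]]
ground_truth = [[1, 0],
                [0, 0]]
print(round(mean_iou(predicted, ground_truth), 3))  # 0.583
```

In the toy example the parking lot class scores 1/2 and the background class 2/3, giving a mean of about 0.583.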

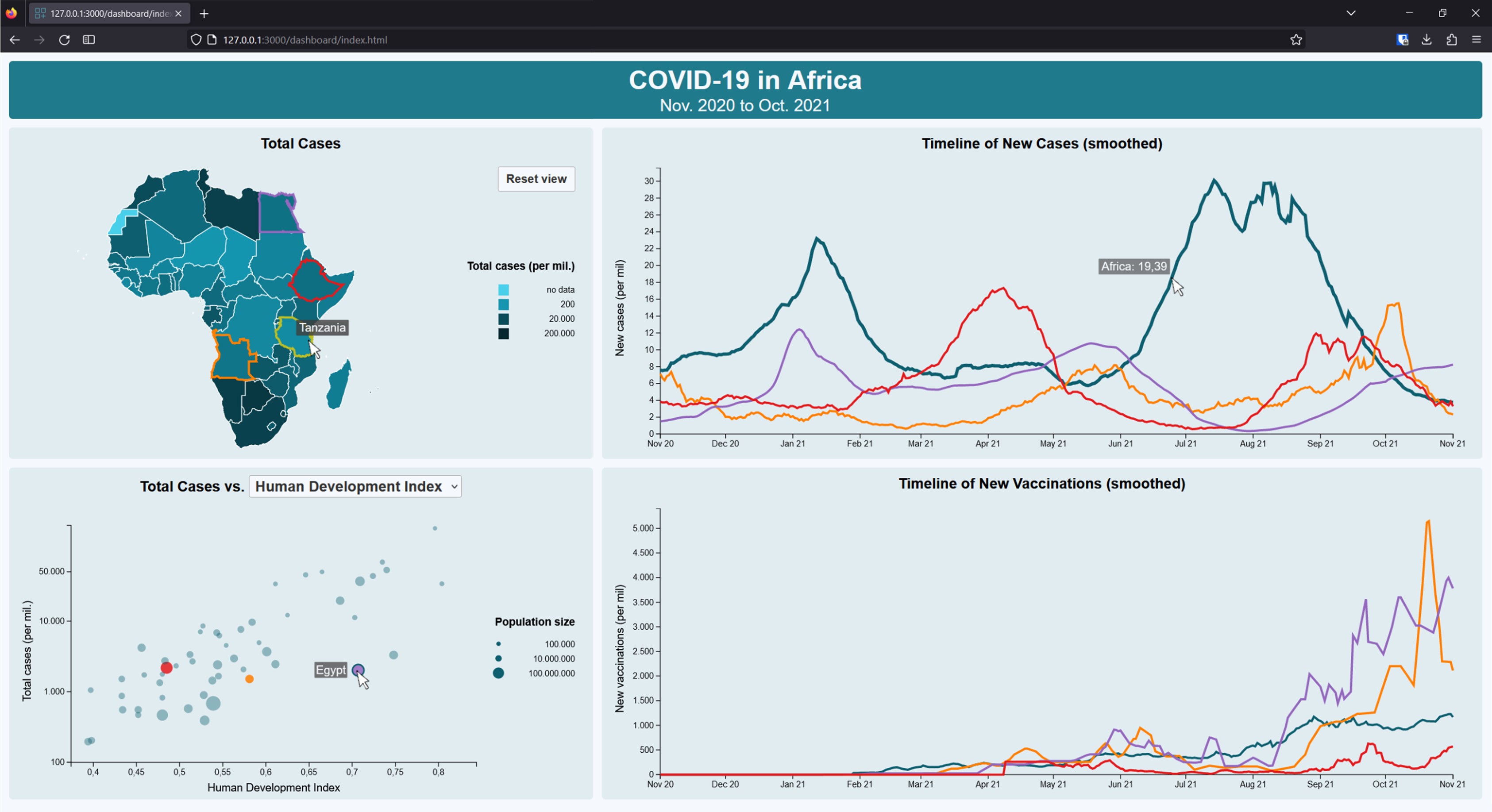

D3, dashboard design, interactivity

Here, the task was to create a user-friendly interface displaying COVID-19 data in Africa. The solution involves building a static webpage using HTML, CSS, and JavaScript, and employing D3 for interactivity and cross-effects in the plots. The resulting dashboard consists of a map view of Africa showcasing total COVID-19 cases, a timeline of new cases and vaccinations, and a bubble chart demonstrating the correlation between socio-economic indicators and total cases. If a country is selected, it will be highlighted across all the plots for easy comparison.

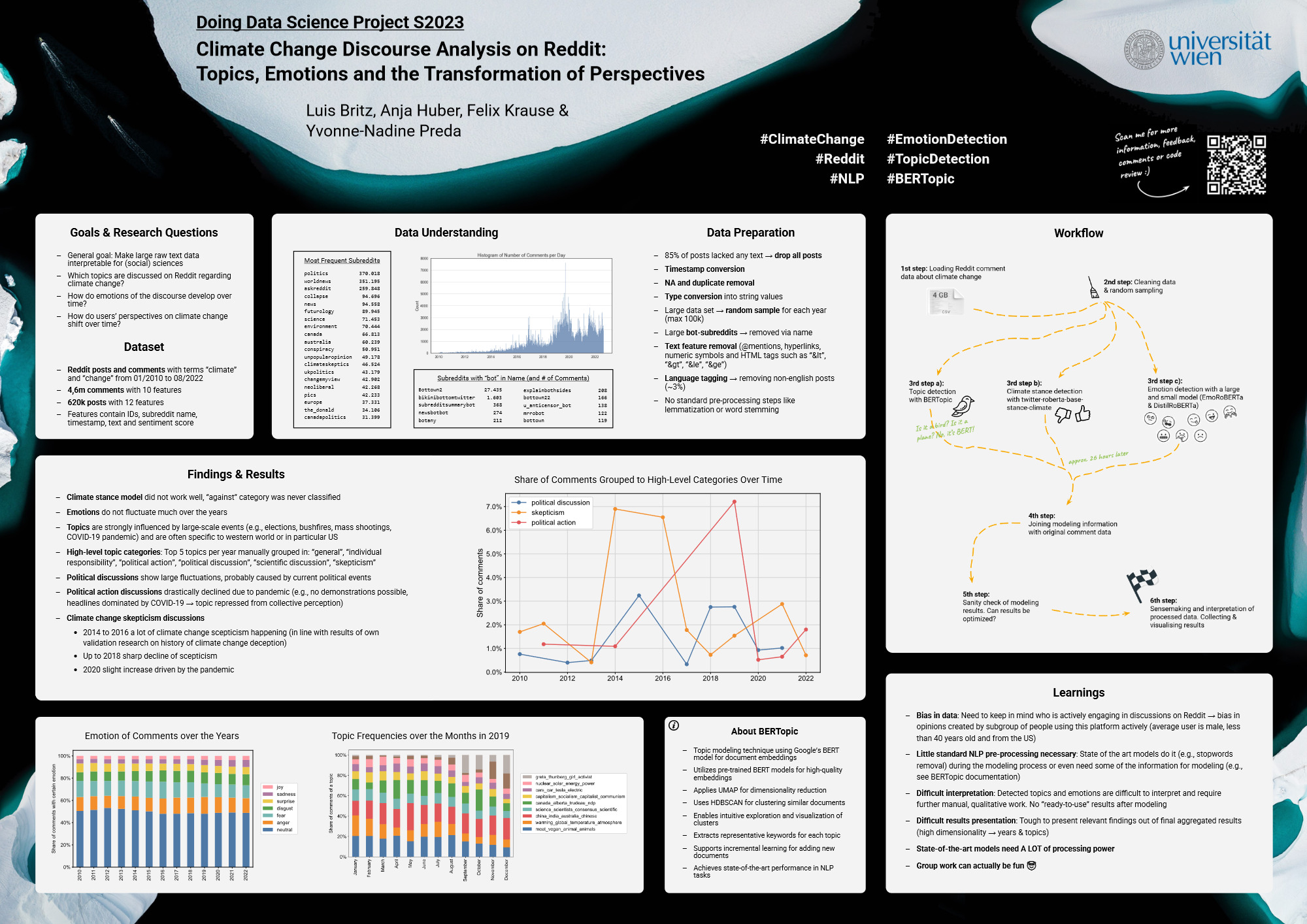

NLP, BERTopic, sentiment

The goal of this analysis is to examine the emotions and perspectives expressed in Reddit comments about "climate change" over time. The data used consists of 4.6 million relevant comments from January 2010 to August 2022. The solution involves using BERT-based pre-trained models for emotion detection and BERTopic for topic detection. The results show that emotions remain relatively stable over the years, while topics are heavily influenced by major events such as elections, bushfires, mass shootings, and the COVID-19 pandemic. Many topics are specific to the western world, particularly the United States.

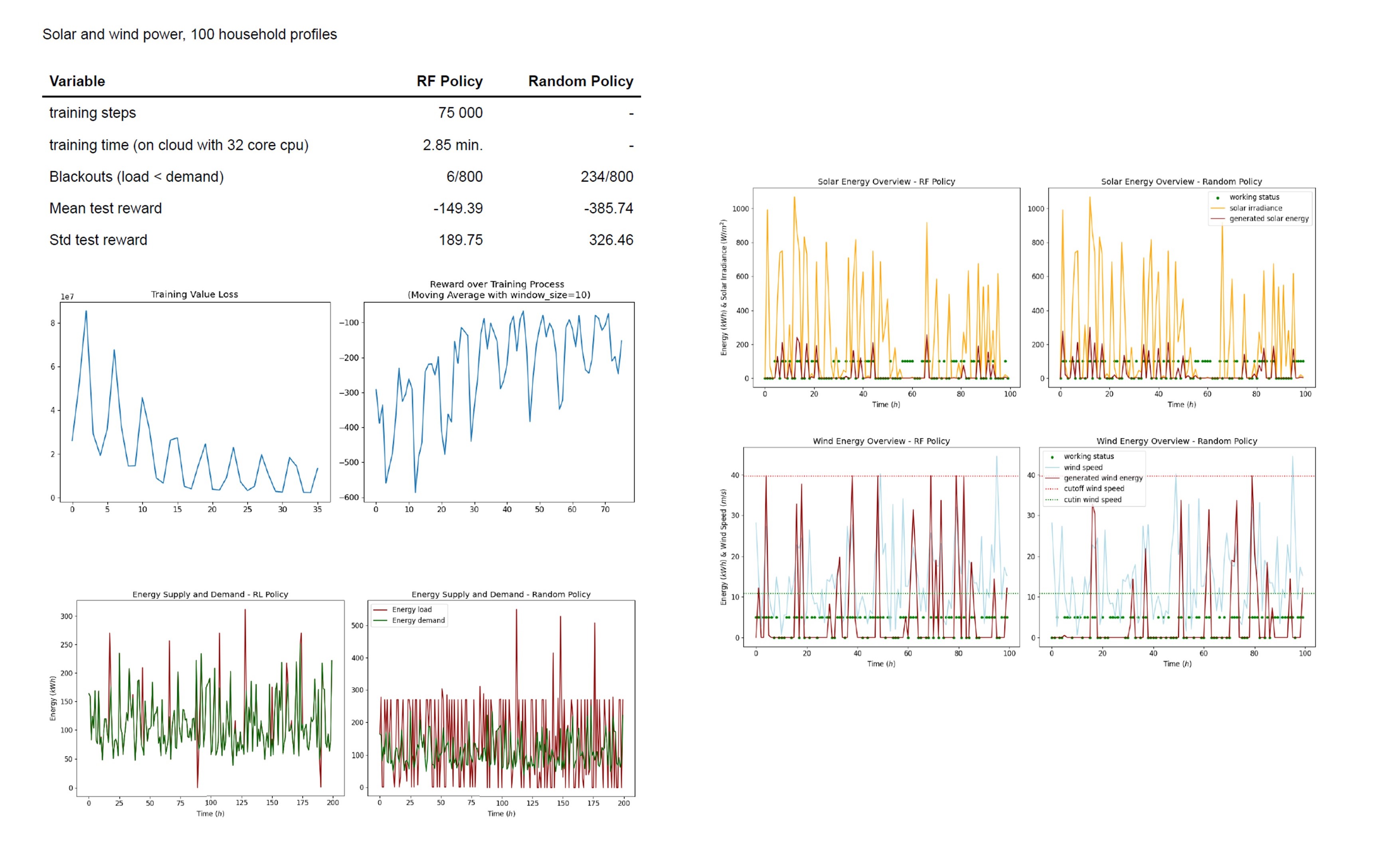

agent optimization, DRL, PPO

This project aims to enhance energy management within a microgrid through a reinforcement learning model. By integrating solar, wind, gas turbine, battery storage, and grid energy trading, the goal is to minimize operational costs while ensuring a reliable power supply and maximizing renewable energy usage. Using data from several hundred household profiles, including solar and wind generation, energy demand, and grid prices, a deep reinforcement learning (DRL) model based on Proximal Policy Optimization (PPO) was trained. In simulations, the model exhibited notable improvements in cost reduction and energy efficiency across various scenarios, demonstrating the potential of reinforcement learning in optimizing microgrid energy systems for increased sustainability and economic benefits.

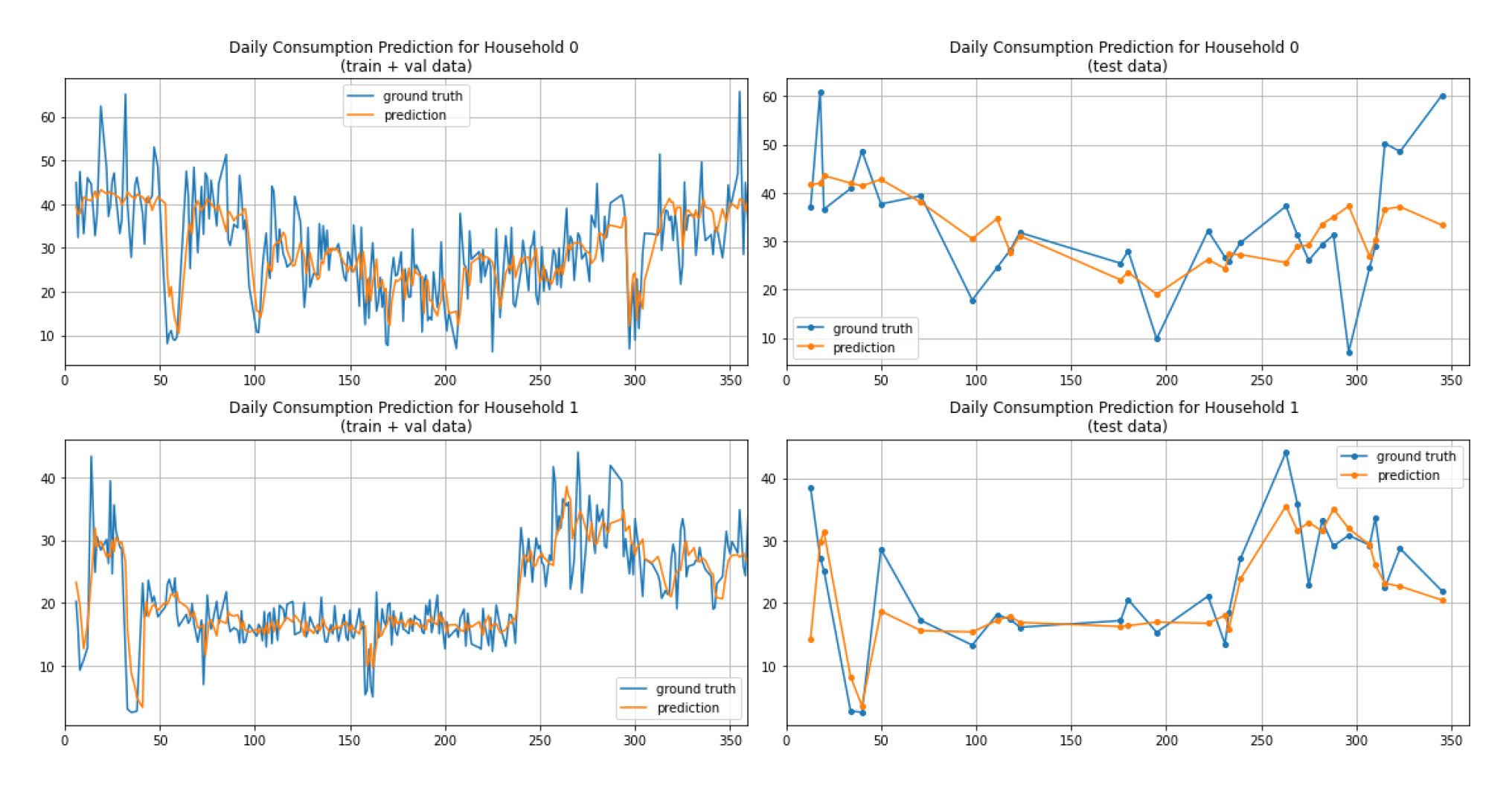

deep learning, privacy, neural networks, forecasts, PyTorch

The project aimed to predict and classify appliance energy consumption using federated learning. Daily energy consumption data from 50 households was processed. Recurrent neural network (RNN) and long short-term memory (LSTM) models were built with PyTorch and trained in a decentralized manner to preserve privacy. By experimenting with hyperparameters and activation functions, model performance could be optimized. The main results showed that LSTM outperformed RNN in prediction quality, though with higher training time. In this implementation, federated learning proved effective at preserving data privacy while achieving final model performance comparable to traditional training methods.
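
The core idea of decentralized training can be sketched with a single aggregation round. The sketch assumes FedAvg-style averaging weighted by each household's sample count, which the summary above does not confirm. Plain Python lists stand in for PyTorch parameter tensors, and the function name is hypothetical.

```python
def federated_average(client_updates):
    """Combine locally trained parameters into one global model.

    client_updates: list of (num_samples, params) tuples, where params maps a
    parameter name to a flat list of floats. Only parameters leave a client,
    never the raw consumption data.
    """
    total_samples = sum(num_samples for num_samples, _ in client_updates)
    if total_samples <= 0:
        raise ValueError("need at least one client with samples")
    reference = client_updates[0][1]  # all clients share the same architecture
    averaged = {}
    for name, values in reference.items():
        averaged[name] = [
            sum(n * params[name][i] for n, params in client_updates) / total_samples
            for i in range(len(values))
        ]
    return averaged


# Two households with made-up parameters; the second holds three times more data.
updates = [
    (1, {"w": [0.0, 2.0]}),
    (3, {"w": [4.0, 2.0]}),
]
print(federated_average(updates))  # {'w': [3.0, 2.0]}
```

In a full training loop, the averaged parameters would be sent back to every household for the next round of local training.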